Exploring the Speech APIs in Chrome: A Developer's Overview

We live in an exciting time where we can speak to computers and they can speak back to us. This is no longer limited to your iPhone or Android phone; these days it’s also supported in browsers.

1) Speech Synthesis API

Let’s start with the lesser-known Speech Synthesis API. This API lets you have your content spoken out loud. At the time of writing, only a few browsers support it.

You’ll need feature detection for every API so that you don’t get unwanted errors in browsers that lack it. For the Speech Synthesis API, use if ('speechSynthesis' in window). The expression inside the if is true if the browser supports the API and false if it doesn’t.
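The same kind of check works for speech recognition, which Chrome exposes under the prefixed name webkitSpeechRecognition (the standard name is SpeechRecognition). Here is a minimal sketch that checks both in one place. detectSpeechSupport is a hypothetical helper name, and it takes the global object as a parameter (defaulting to globalThis) only so that it can be tried outside a browser.

```javascript
// Hypothetical helper: report which Web Speech features a global object exposes.
// Call it with no argument in a browser (it uses globalThis);
// pass a plain object to try it elsewhere.
function detectSpeechSupport(win = globalThis) {
  // Prefer the standard constructor name, fall back to Chrome's prefixed one.
  const Recognition = win.SpeechRecognition || win.webkitSpeechRecognition || null;
  return {
    synthesis: 'speechSynthesis' in win && 'SpeechSynthesisUtterance' in win,
    recognition: Recognition !== null,
    Recognition, // constructor to call with `new`, or null if unsupported
  };
}

// Usage in a page:
// const support = detectSpeechSupport();
// if (support.recognition) { const recognition = new support.Recognition(); }
```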

An example speak function would look like this:

```javascript
function speak (sentence) {
  // Feature detection
  if (!('speechSynthesis' in window)) return;

  // Create a speech utterance object; this takes a string as argument.
  var utterance = new SpeechSynthesisUtterance(sentence);

  // Speak the text out loud.
  speechSynthesis.speak(utterance);
}
```

That’s how simple it is. There are a few functions (or rather, events) that you can listen for on the utterance object.

- .onstart - fires when the speaking begins.

- .onend - fires when the speaking has finished.

- .onerror - fires when the text can’t be spoken for some reason.
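Because onend and onerror mark the two ways an utterance can finish, they fit naturally into a Promise. A minimal sketch, assuming a browser that supports speech synthesis; speakAsync and its env parameter are hypothetical names, and env exists only so a stub can stand in for window when testing.

```javascript
// Hypothetical wrapper: speak `text` and settle once speaking is over.
// `env` defaults to the real global object; it needs speechSynthesis
// and SpeechSynthesisUtterance.
function speakAsync(text, env = globalThis) {
  return new Promise((resolve, reject) => {
    if (!('speechSynthesis' in env)) {
      reject(new Error('Speech synthesis is not supported'));
      return;
    }
    const utterance = new env.SpeechSynthesisUtterance(text);
    utterance.onstart = () => console.log('Started speaking');
    utterance.onend = () => resolve(utterance);   // finished speaking
    utterance.onerror = (event) => reject(event); // could not be spoken
    env.speechSynthesis.speak(utterance);
  });
}

// Usage in a page:
// speakAsync('Hello world').then(() => console.log('done'), (e) => console.error(e.error));
```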

You can also change the speaking voice, for example to speak in a different language, by setting utterance.voice to one of the voices returned by speechSynthesis.getVoices().
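Each voice from speechSynthesis.getVoices() is a SpeechSynthesisVoice with a lang tag such as "en-US" and a default flag, so choosing one by language is a simple search. A sketch, where pickVoice is a hypothetical helper; keep in mind that the list can be empty until the browser has finished loading its voices.

```javascript
// Hypothetical helper: choose a voice whose language tag matches `lang`.
// Tries an exact match ("fr-FR"), then a primary-language match ("fr"),
// then the browser's default voice, and finally gives up with null.
function pickVoice(voices, lang) {
  const wanted = lang.toLowerCase();
  const primary = wanted.split('-')[0];
  return (
    voices.find((v) => v.lang.toLowerCase() === wanted) ||
    voices.find((v) => v.lang.toLowerCase().split('-')[0] === primary) ||
    voices.find((v) => v.default) ||
    null
  );
}

// Usage in a page:
// const utterance = new SpeechSynthesisUtterance('Bonjour tout le monde');
// const voice = pickVoice(speechSynthesis.getVoices(), 'fr-FR');
// if (voice) { utterance.voice = voice; utterance.lang = voice.lang; }
// speechSynthesis.speak(utterance);
```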

2) Speech Recognition (Web Speech API)

Next up is speech recognition. It’s actually part of the same Web Speech API as speech synthesis, but this time we do the exact opposite: we listen for the user’s voice input and convert it to text.

There are two ways of doing this. The default is non-continuous mode, where you get the result after the user is done speaking. The other is continuous mode, where recognition keeps listening and, with interim results switched on, the text is updated while you speak.
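Since the default, non-continuous mode hands you a single result once the user stops talking, it maps neatly onto a Promise. A minimal sketch, assuming Chrome's webkitSpeechRecognition (or the unprefixed SpeechRecognition); listenOnce and its env parameter are hypothetical, and env only lets a stub replace window for testing.

```javascript
// Hypothetical wrapper: listen once (non-continuous mode) and resolve with
// the top transcript and its confidence.
function listenOnce(lang = 'en-US', env = globalThis) {
  return new Promise((resolve, reject) => {
    const Recognition = env.SpeechRecognition || env.webkitSpeechRecognition;
    if (!Recognition) {
      reject(new Error('Speech recognition is not supported'));
      return;
    }
    const recognition = new Recognition();
    recognition.lang = lang;
    recognition.continuous = false;     // the default: stop after one phrase
    recognition.interimResults = false; // only deliver the final result
    recognition.onresult = (event) => {
      const best = event.results[0][0]; // first result, top alternative
      resolve({ transcript: best.transcript, confidence: best.confidence });
    };
    recognition.onerror = (event) => reject(new Error(event.error));
    // onend also fires after a successful result; rejecting an already
    // settled Promise is a no-op, so this only matters when nothing was heard.
    recognition.onend = () => reject(new Error('No speech recognized'));
    recognition.start();
  });
}
```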

This is a basic example of a listening function.

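```javascript
function listen () {
  // Chrome exposes the recognizer under a prefixed name.
  // Returns undefined when the browser doesn't support it.
  if (!('webkitSpeechRecognition' in window)) return;

  var recognition = new webkitSpeechRecognition();

  // Fires when the browser has recognized some speech.
  recognition.onresult = function (event) {
    var results = event.results; // all results so far
    var first = results[0];      // the first result
    var endResult = first[0];    // its best alternative

    // A value returned from an event handler goes nowhere, so log it instead.
    console.log(endResult);
  };

  return recognition;
}
```

Now to actually use this, you would do the following.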

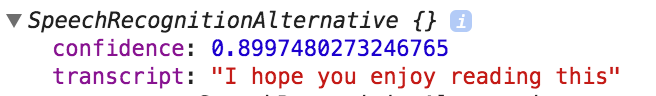

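```javascript
var listening = listen();

startButton.onclick = function () { listening.start(); };
stopButton.onclick = function () { listening.stop(); };
```

If you console.log the endResult, you’ll see a SpeechRecognitionAlternative object with two properties: transcript (the recognized text) and confidence (a number between 0 and 1).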

A couple of tips:

- The object first in my example also has an isFinal property. This is a boolean, and you can use it during live speaking to tell finished results apart from ones that may still change. Its numeric keys hold alternative transcriptions (one by default; set recognition.maxAlternatives to ask for more), and each alternative’s .confidence is really neat.

- When using live speaking, you need to set the following properties:

```javascript
recognition.continuous = true;
recognition.interimResults = true;
```
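In live mode, event.results keeps growing during the session, and each result's isFinal flag tells you whether its text is settled. A sketch of rebuilding the text on every result event; splitTranscript is a hypothetical helper, and because it only reads indexes, isFinal and transcript, it can be exercised with ordinary arrays.

```javascript
// Hypothetical helper for live listening: rebuild the text from every result
// received so far. Final results won't change any more; interim ones may.
function splitTranscript(results) {
  let finalText = '';
  let interimText = '';
  for (let i = 0; i < results.length; i++) {
    const result = results[i];
    const text = result[0].transcript; // top alternative of this result
    if (result.isFinal) {
      finalText += text;
    } else {
      interimText += text;
    }
  }
  return { finalText, interimText };
}

// Usage in a page (live mode):
// recognition.onresult = (event) => {
//   const { finalText, interimText } = splitTranscript(event.results);
//   output.textContent = finalText + interimText; // `output` is any element
// };
```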

For an example of live speech check out Google’s example.

Writing a wrapper (Blabla)

After using these APIs for a while I thought it would be cool to write a wrapper that handles both speaking and listening. It’s on GitHub and you can find a demo here!

I encourage you to play with it; it’s a lot of fun. One thing that took me a while to figure out is that the voices get loaded asynchronously! So if you want to list the voices, you need to do:

```javascript
Blabla.on('voicesLoaded', function () {
  console.log(Blabla.voices);
});
```

That’s it!
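Without a wrapper, the native API has the same quirk: speechSynthesis.getVoices() can return an empty array until the browser fires a voiceschanged event on speechSynthesis. A minimal sketch of waiting for it; loadVoices is a hypothetical name, and its synth parameter defaults to the global speechSynthesis, which only exists in supporting browsers.

```javascript
// Hypothetical helper: resolve with the voice list once it is available.
// `synth` is window.speechSynthesis in a browser; a stub works for testing.
// If the platform has no voices at all, this never resolves; add a timeout
// if that matters for your page.
function loadVoices(synth = globalThis.speechSynthesis) {
  return new Promise((resolve) => {
    const voices = synth.getVoices();
    if (voices.length > 0) {
      resolve(voices); // already loaded
      return;
    }
    // Otherwise wait for the browser to announce that the voices are ready.
    synth.addEventListener('voiceschanged', () => resolve(synth.getVoices()), { once: true });
  });
}

// Usage in a page:
// loadVoices().then((voices) => voices.forEach((v) => console.log(v.name, v.lang)));
```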

I hope you found this post useful and that you’ll have as much fun with these APIs as I had! Feel free to contact / follow me on Twitter or leave a comment below!

— Jilles